Machine Learning Model as API with AWS Serverless

Powered by cloud technologies, business processes and activities are undergoing a profound digital transformation, and new technologies and pathways emerge every day. When the Internet was first introduced, its basic job was to provide information. Since then, the objective of search engines has changed drastically, from simply providing information to providing the most accurate and relevant information. Machine learning has contributed a great deal to this shift. In general, machine learning is an application of artificial intelligence: it lets systems learn from previous experience and trends and find solutions on their own, without explicit manual instructions.

Why and how is Machine Learning related to this?

Nowadays, machine learning models are used across many different platforms. In general terms, a machine learning model is a mathematical or statistical representation of a real-life problem or situation. A model is produced by running a learning algorithm over training data. When a new situation (an input) arrives, the model applies the patterns it learned from that data to produce the best answer for the given input parameters. Machine learning as an application and machine learning models go hand in hand, and both sit under the broad umbrella of artificial intelligence. The big names in the IT industry, such as Google, Amazon and IBM, use machine learning as one of their most important tools for delivering services to their customers.

Why use Machine Learning Model as API?

To understand this concept, we first need to understand what an API is. API stands for ‘Application Programming Interface.’ An API lets two different pieces of software communicate with each other. In general terms, an API is a set of code, definitions, protocols and tools bundled together for building software or applications. Because an API is the link between two pieces of software, it lets them exchange requests and responses. This is the main reason to expose a machine learning model as an API when building distinct applications on the cloud. An API gateway then becomes the connecting link that sends and receives requests across servers in different locations, which makes the work much easier.

The Use of Machine Learning Model as API with AWS Serverless: A Case Study

The Use case

We recently had the chance to help one of our start-up clients in the oil and gas research domain. Their application runs heavy workloads on AWS, which involve training and deploying many machine learning models using AWS SageMaker and AWS EC2. AWS SageMaker is a service that helps data scientists and developers quickly build, train and deploy machine learning models in a hosted environment. AWS EC2 (Elastic Compute Cloud) provides scalable computing capacity in the form of virtual servers.

The Problem

For every model that was trained, deployed and exported, the developers had to launch a separate AWS EC2 instance (a virtual machine), copy the model over to it, and then launch a Flask- or Django-based API to serve the pickled model. This is exactly where our client was incurring too much cost. They were paying for AWS EC2 and SageMaker and had to launch a new virtual machine every time they wanted to run an API for a different model. Deploying models from the AWS SageMaker dashboard into a secure, scalable environment can be done with just one click. However, that deployment is billed according to the instances it runs on (often GPU instances) and the minutes they are in use.
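The sketch below shows the kind of API each EC2 instance ran, to make the per-model setup concrete. It uses Python's standard-library `http.server` as a stand-in for Flask or Django. That substitution keeps the example dependency-free. `LinearModel`, `load_model`, `make_server` and the `/predict` route are hypothetical names and are not the client's code.

```python
import json
import pickle
from dataclasses import dataclass
from http.server import BaseHTTPRequestHandler, HTTPServer


@dataclass
class LinearModel:
    """Hypothetical stand-in for a trained model: y = w . x + b."""
    weights: list
    bias: float

    def predict(self, features):
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias


def load_model(path):
    # Only unpickle files you created yourself: pickle can execute code on load.
    with open(path, "rb") as f:
        return pickle.load(f)


def make_server(model, host="127.0.0.1", port=8000):
    """Build (but do not start) an HTTP server that answers POST /predict."""

    class PredictHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/predict":
                self.send_error(404)
                return
            try:
                length = int(self.headers.get("Content-Length", 0))
                payload = json.loads(self.rfile.read(length) or b"{}")
                prediction = model.predict(payload["features"])
            except (ValueError, KeyError, TypeError):
                self.send_error(400, "expected JSON body with 'features'")
                return
            body = json.dumps({"prediction": prediction}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return HTTPServer((host, port), PredictHandler)
```

On an instance, you would run `make_server(load_model("model.pkl")).serve_forever()`. Repeat that for every model, with one always-on VM each, and you get the cost problem described above.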

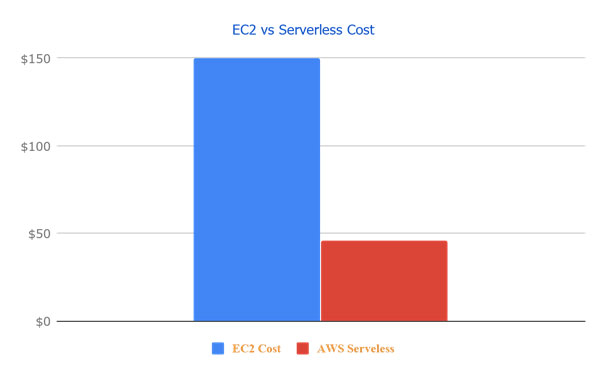

| AWS Services | Cost |
|---|---|
| EC2 (4x t3.medium) | $ 133.3 |
| EBS | $ 15.0 |
| Other AWS services (including support) | $ 2 |
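The testing phase reports that EC2 made up about 90% of the bill. That figure can be checked against this table with a few lines of arithmetic. This is a sketch, and the figures are copied directly from the table.

```python
# Line items from the EC2-based cost table (USD).
ec2_costs = {
    "EC2 (4x t3.medium)": 133.3,
    "EBS": 15.0,
    "Other AWS services (including support)": 2.0,
}

total = sum(ec2_costs.values())
ec2_share = ec2_costs["EC2 (4x t3.medium)"] / total

print(f"Total: ${total:.1f}")          # Total: $150.3
print(f"EC2 share: {ec2_share:.1%}")   # EC2 share: 88.7%
```

The EC2 line alone comes to just under 90% of the total. The EBS volumes attached to those same instances account for most of the remainder.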

The testing phase

Whenever our client wanted to test and train a new model API, they had to launch a new AWS EC2 instance. Not only was this approach difficult to manage and expensive, it was also the opposite of what our client had planned when adopting machine learning models. Further tests showed that the EC2 instances were handling very few requests per hour. These tests tracked variables such as API requests, response data and resource utilization. They also revealed that the EC2 instances accounted for about 90% of the client's total cost, because the instances sat idle for long periods. At times a virtual machine served as few as 10 API requests per hour, or even fewer.
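The sketch below estimates what each request costs on the EC2 setup, to put 10 requests per hour in perspective. It rests on three assumptions that are not measured figures. First, the cost table is monthly. Second, a month has 730 hours, as AWS pricing customarily assumes. Third, all four t3.medium instances serve the worst-case 10 requests per hour.

```python
HOURS_PER_MONTH = 730          # assumption: AWS's usual monthly-hours convention
INSTANCES = 4                  # from "EC2 (4x t3.medium)" in the cost table
REQUESTS_PER_HOUR_PER_VM = 10  # the low-traffic figure observed in testing
EC2_MONTHLY_COST = 133.3       # assumption: the cost table is per month

requests_per_month = INSTANCES * REQUESTS_PER_HOUR_PER_VM * HOURS_PER_MONTH
cost_per_request = EC2_MONTHLY_COST / requests_per_month

print(requests_per_month)          # 29200
print(f"${cost_per_request:.4f}")  # $0.0046
```

Under these assumptions, each request costs roughly half a cent in EC2 charges alone. Most of that money pays for capacity that sits idle between requests.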

Our solution to the client

We had to keep in mind that a minimum number of instances must stay running so that the application remains accessible from anywhere. To make sure we delivered a robust solution, we rigorously tested and analyzed the APIs against variables such as the following:

- Resource Utilization

- Request and response data size

- Size of Python API code

- Execution time (ranging from 200 ms to 20,000 ms)

- Memory utilization when processing a request with the pickle model (ranging from 500 MB to 2,400 MB)
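These measurements determine whether an API can move to Lambda behind API Gateway at all. The minimal sketch below checks a measured profile against AWS limits as published at the time of writing, which you should verify against current AWS documentation:

- Lambda memory of up to 10,240 MB.
- A 15-minute Lambda timeout.
- API Gateway's default 29-second REST integration timeout.
- A 6 MB synchronous payload.
- A 250 MB unzipped deployment package.

`ApiProfile` and `lambda_blockers` are hypothetical names.

```python
from dataclasses import dataclass

# Published limits at the time of writing; verify against current AWS docs.
LAMBDA_MAX_MEMORY_MB = 10_240
LAMBDA_MAX_TIMEOUT_MS = 900_000       # 15 minutes
API_GATEWAY_TIMEOUT_MS = 29_000       # default REST API integration timeout
LAMBDA_SYNC_PAYLOAD_MB = 6            # request and response, synchronous invoke
LAMBDA_UNZIPPED_PACKAGE_MB = 250      # function code plus layers, unzipped


@dataclass
class ApiProfile:
    """Measured profile of one model API (field names are hypothetical)."""
    name: str
    execution_ms: int
    peak_memory_mb: int
    payload_mb: float
    package_mb: float


def lambda_blockers(p: ApiProfile) -> list[str]:
    """Return the reasons this API would not fit behind API Gateway + Lambda."""
    issues = []
    if p.peak_memory_mb > LAMBDA_MAX_MEMORY_MB:
        issues.append("peak memory exceeds the largest Lambda size")
    if p.execution_ms > min(LAMBDA_MAX_TIMEOUT_MS, API_GATEWAY_TIMEOUT_MS):
        issues.append("execution exceeds API Gateway/Lambda timeout")
    if p.payload_mb > LAMBDA_SYNC_PAYLOAD_MB:
        issues.append("request/response payload too large")
    if p.package_mb > LAMBDA_UNZIPPED_PACKAGE_MB:
        issues.append("code package too large (store models in S3 instead)")
    return issues


# Worst case of the measured ranges; payload and package sizes are hypothetical.
worst = ApiProfile("worst-case", execution_ms=20_000, peak_memory_mb=2_400,
                   payload_mb=1.0, package_mb=40.0)
print(lambda_blockers(worst))  # []
```

Even the slowest and most memory-hungry measurements fit these limits. In Lambda, the configured memory size also scales the CPU allocated to the function. Setting memory comfortably above the 2,400 MB peak therefore also helps the 20-second requests.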

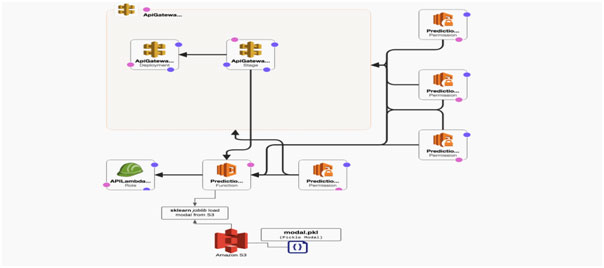

We decided to architect the APIs using AWS serverless components such as:

- AWS Lambda

- API Gateway

- Amazon S3 for storage

- AWS Cognito for authentication
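With Cognito in the stack, API Gateway can reject unauthenticated calls before Lambda runs. With a Lambda proxy integration, it also forwards the verified token claims to the function inside the event. The hypothetical helper below reads those claims, for example to log which user requested a prediction. It is a sketch that assumes the proxy event layouts API Gateway documents for two cases: Cognito user pool authorizers on REST APIs, and JWT authorizers on HTTP APIs.

```python
def caller_claims(event: dict) -> dict:
    """Return the token claims API Gateway attached to a Lambda proxy event.

    REST APIs with a Cognito user pool authorizer put them under
    requestContext.authorizer.claims; HTTP APIs with a JWT authorizer put
    them under requestContext.authorizer.jwt.claims. Returns {} if absent.
    """
    authorizer = event.get("requestContext", {}).get("authorizer") or {}
    if "claims" in authorizer:
        return authorizer["claims"]
    return authorizer.get("jwt", {}).get("claims", {})


# Example: the Cognito user ID is the standard "sub" claim.
event = {"requestContext": {"authorizer": {"claims": {"sub": "user-123"}}}}
print(caller_claims(event).get("sub"))  # user-123
```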

Because our models were fairly large, we stored them in an S3 bucket, packaged all of the deployable Lambda code, and deployed it using an AWS SAM template. The result was a substantial reduction in cost.

| AWS Services | Cost per month |
|---|---|
| AWS Lambda | $ 15 |
| API Gateway | < $ 26 |
| AWS Cognito for authentication (fewer than 50K users) | $ 0 |
| AWS S3 | < $ 5 |
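Lambda bills a per-request fee plus a charge per GB-second, which is configured memory multiplied by execution time rounded up to the millisecond. Idle time therefore costs nothing. The sketch below estimates that charge while ignoring the free tier. The prices are assumed x86 on-demand list prices for us-east-1, and the traffic figures are hypothetical. Treat the output as an order-of-magnitude check, not a reproduction of the $15 in the table.

```python
# Assumed x86 list prices in us-east-1 at the time of writing; check the
# AWS Lambda pricing page for your region and architecture.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20


def lambda_monthly_cost(requests, avg_duration_ms, memory_mb):
    """Estimate Lambda compute plus request charges, ignoring the free tier."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_fee = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return gb_seconds * PRICE_PER_GB_SECOND + request_fee


# Hypothetical traffic: 100,000 requests a month, 2 s each, 2,400 MB configured.
print(f"${lambda_monthly_cost(100_000, 2_000, 2_400):.2f}")  # $7.83
```

Cost scales with the number of requests actually served rather than with hours of uptime. This is the key difference from the always-on EC2 instances in the first table.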

As the table above shows, the running cost of the machine learning model APIs came down significantly. The same architecture also made it possible to serve more requests with an acceptable level of performance.

At Loves Cloud, we are constantly leveraging the power of various open source software solutions to automate, optimize, and scale the workloads of our customers. To learn more about our services aimed at digital transformation of your business, check https://www.loves.cloud/.