Docker: A 10,000-Foot Overview

With technology advancing rapidly, every company aims to win over its customers with an experience that is a notch above the competition. The first step toward that is making use of cutting-edge technology. As workloads have grown and changed, applications have undergone a tremendous transformation.

Looking back over roughly the last two decades, we’ll see that applications have evolved from the monoliths of 2000 to the loosely coupled services of today, and from slow-changing releases to the rapidly updated applications of 2019. Likewise, big servers have made way for smaller servers and devices.

Today, one physical server is capable of hosting multiple applications, each running in its own virtual machine. Docker is software that allows applications to run in separate environments. It is basically a tool that helps to pack, ship, and run applications within specific ‘containers.’ To understand the significance of containers in modern programming, let’s first see what they are.

Docker isn’t a silver bullet that will fix your application. Used correctly, however, it gives you efficient resource utilization and process isolation, and it makes your application portable.

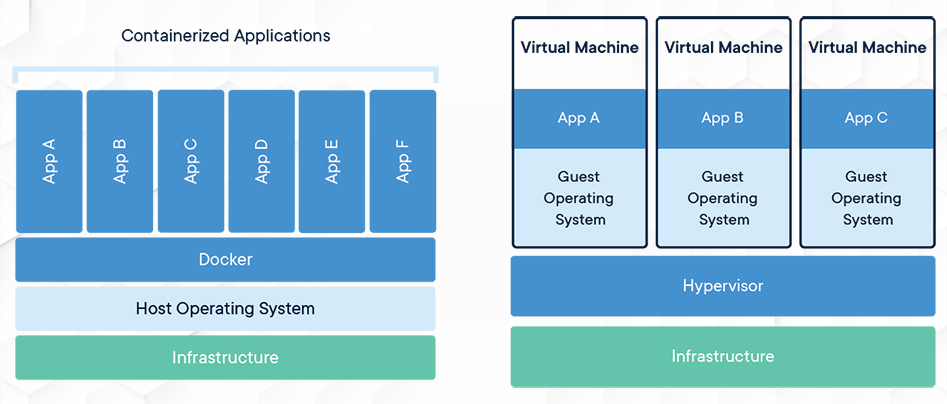

Containers versus Virtual Machines

Containers and Virtual Machines both allow multiple types of software to be run in contained environments.

Virtual Machines are abstractions of the hardware layer: each VM simulates a physical machine that can run software. A hypervisor allows multiple virtual machines to run on one physical machine. Each VM has its own operating system, applications, binaries, libraries and dependencies.

Containers, by contrast, are an abstraction of the application layer: each container runs an isolated software application while sharing a common operating system. Containerizing an application means packaging it along with all of the configuration files, libraries and dependencies it needs to run reliably and efficiently across different computing environments. Multiple containers can run on the same machine, sharing the same OS kernel, and each of them runs as an isolated process in user space.

Docker

Docker is an open source tool designed to make it easier to create, deploy, and run applications by using containers.

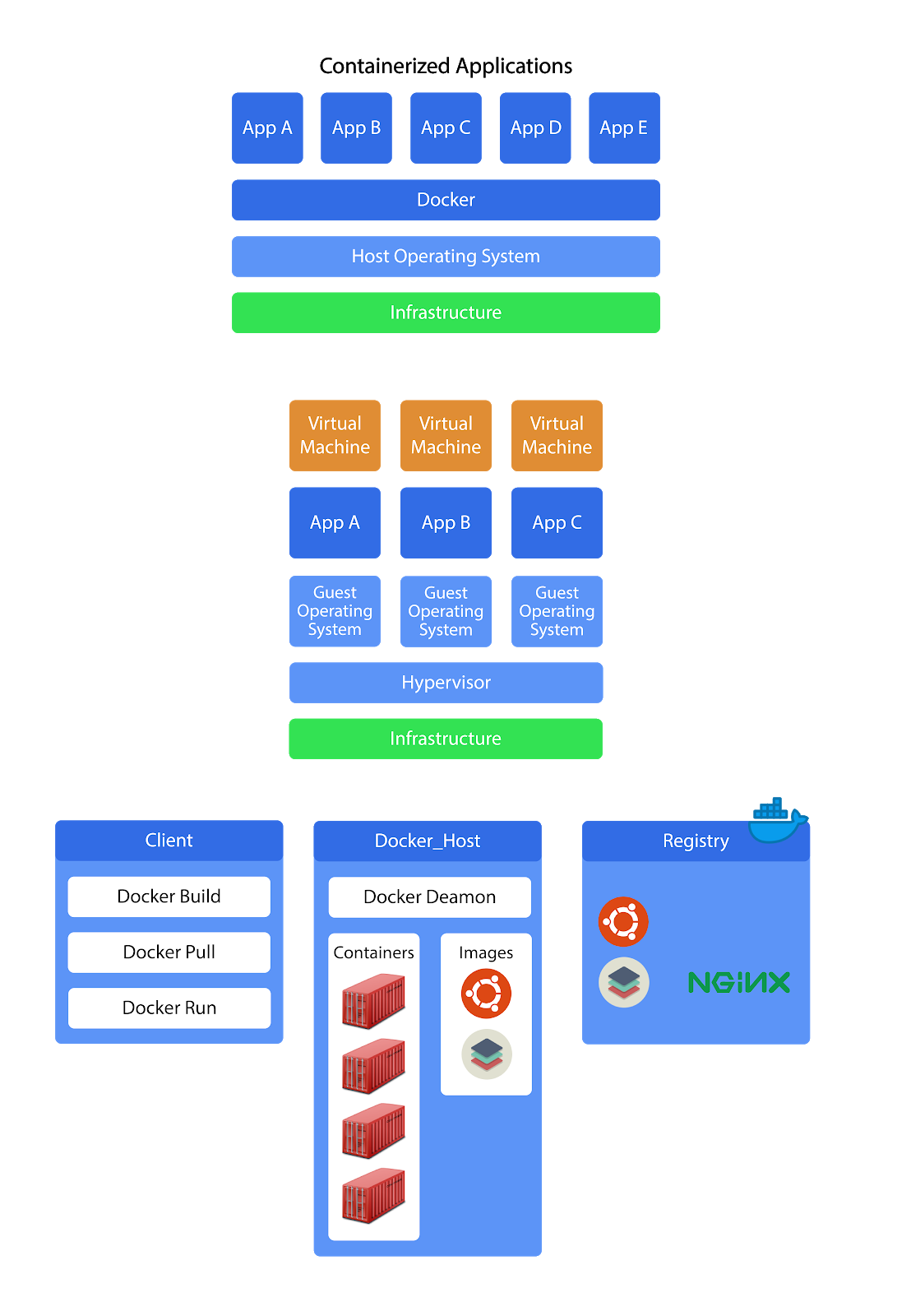

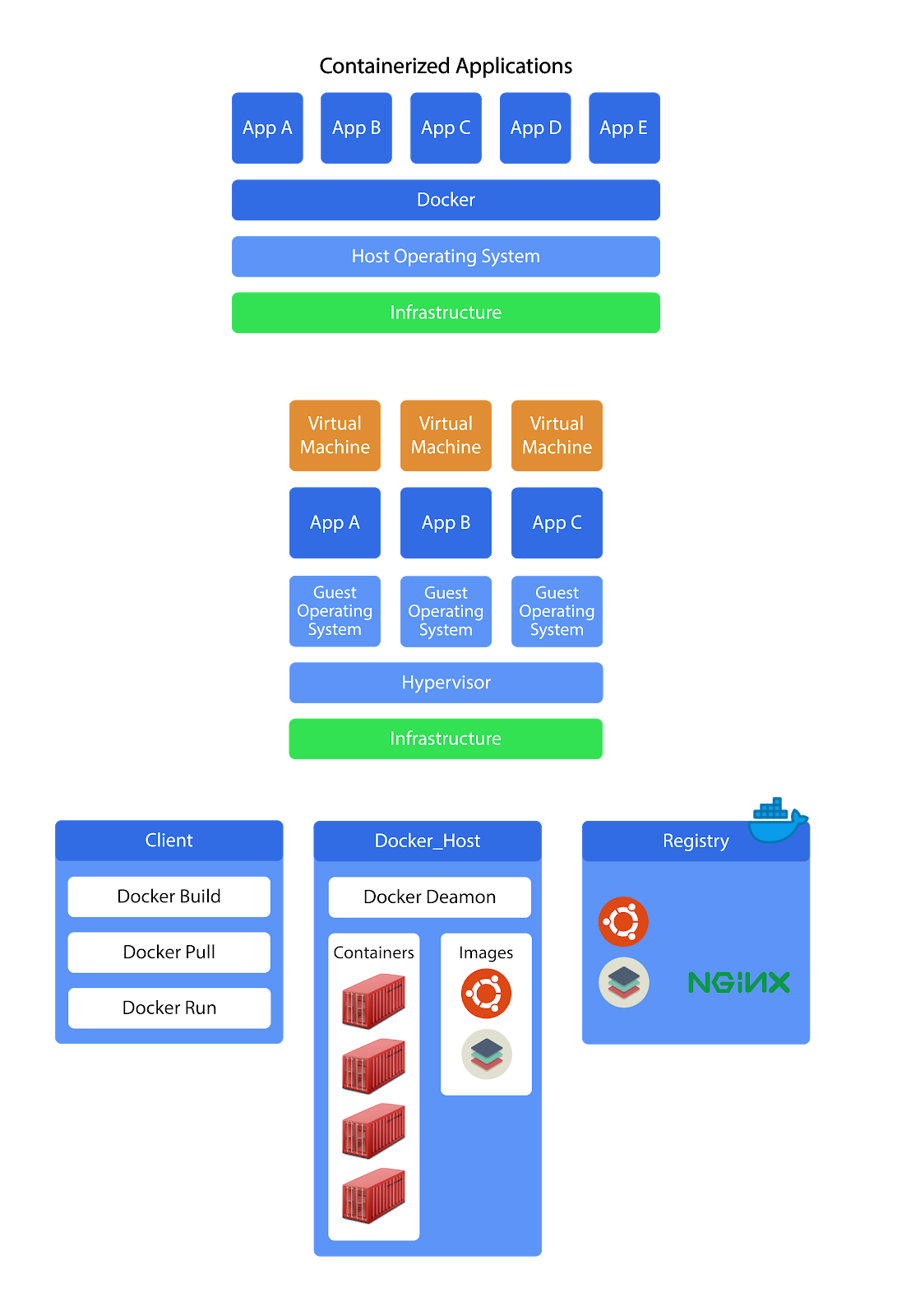

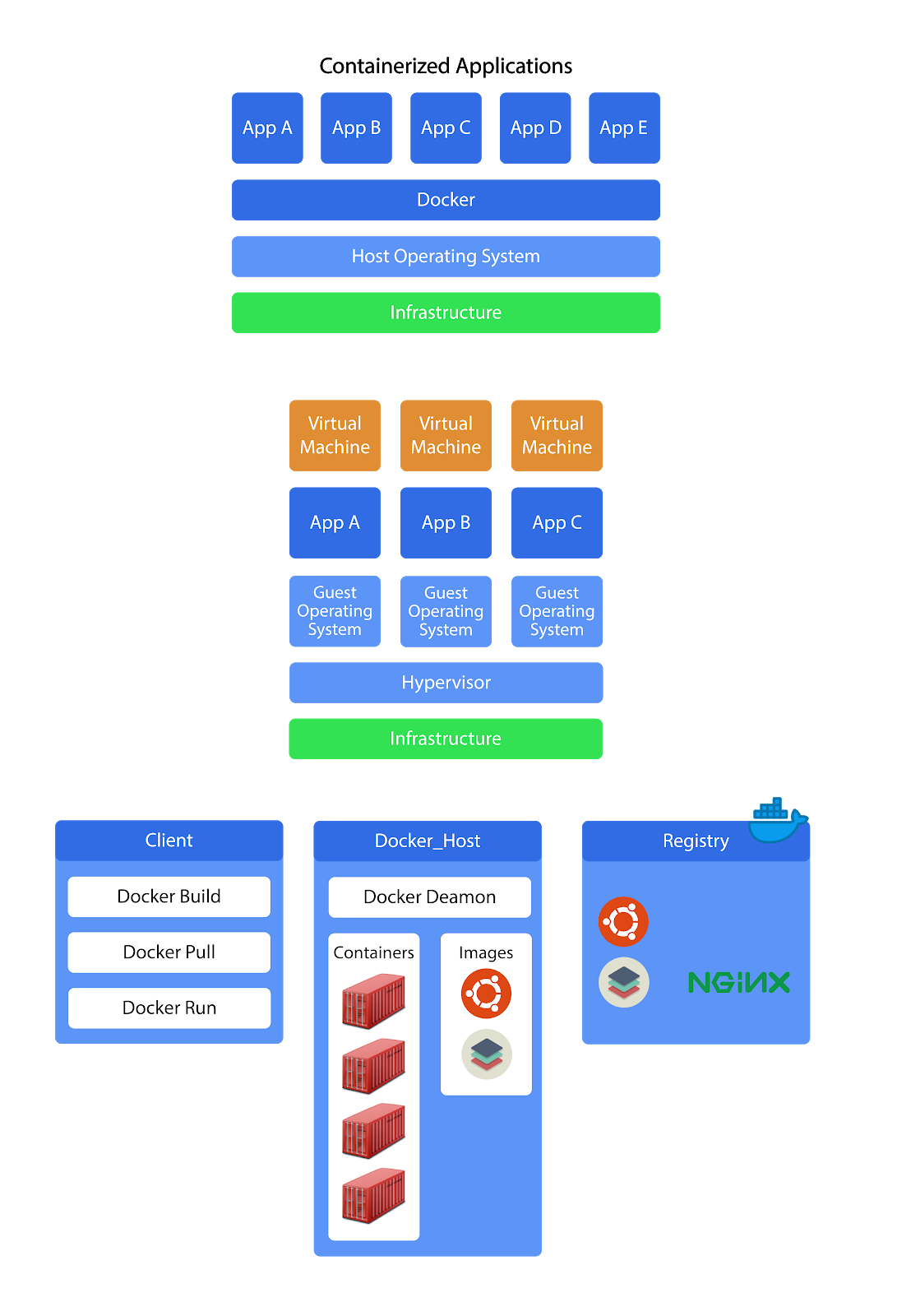

The Docker Architecture

Docker uses a client-server architecture, that is, the server hosts, delivers and manages most of the resources and services consumed by the client. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The daemon is the server side of the architecture, and clients connect to it through a REST API, over a UNIX socket or a network interface.
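To make the client-daemon conversation concrete, here is a minimal sketch of a hand-rolled client that sends one REST request to the daemon over a UNIX socket using only the Python standard library. It assumes the daemon listens on the common default path `/var/run/docker.sock` and answers `GET /version`; the class and function names are ours, purely for illustration, and this is not how the official Docker client is implemented.

```python
import http.client
import json
import socket

# Assumption: the daemon listens on the usual default UNIX socket path.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that dials a UNIX socket instead of a TCP port."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header, not for routing.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path=DOCKER_SOCKET):
    """Return the daemon's version info as a dict, or None if unreachable."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException, ValueError):
        # No socket, no permission, or an unexpected reply.
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    print(daemon_version())
```

On a machine without a running daemon (or without permission to read the socket) the function simply returns `None`, so the sketch is safe to try anywhere.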

The client is how we run Docker commands, either through a command line interface or through the Docker API. The Docker host is the server side of the architecture and runs the Docker daemon. The client and the Docker host can be on the same machine or on separate servers. The Docker ecosystem also includes the Docker registry, which is a storage and distribution system for named Docker images. A registry is further organized into Docker repositories, which are basically version control systems for Docker images.

A repository holds all the versions of a specific image. The registry allows Docker users to pull images locally, as well as push new images to the registry.
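The registry, repository and version of an image are all visible in the name used to pull or push it. The sketch below splits such a name into its three parts. It is a simplified illustration, not Docker's own parser: the function name is hypothetical, digests (`@sha256:...`) are ignored, and we assume the usual conventions that a missing registry means `docker.io` and a missing tag means `latest`.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(name, default_registry="docker.io", default_tag="latest"):
    """Split an image name into registry, repository and tag (simplified)."""
    first, slash, rest = name.partition("/")
    # The first path component is treated as a registry host only if it
    # looks like one: it contains a dot or a port, or is "localhost".
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = default_registry, name
    # Whatever follows the last colon in the remainder is the tag.
    repository, colon, tag = remainder.rpartition(":")
    if not colon:
        repository, tag = remainder, default_tag
    return ImageRef(registry, repository, tag)


if __name__ == "__main__":
    for name in ("nginx", "nginx:1.25", "localhost:5000/team/app:v2"):
        print(name, "->", parse_image_ref(name))
```

Each tag in a repository points at one version of the image, which is why the same repository name with different tags can yield different images.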

Docker Image and Containers

A Docker image is the template (application plus required binaries and libraries) needed to build a running Docker container. An image is built up from a series of read-only layers, each of which represents an instruction in the image’s Dockerfile.

A container is a ‘runnable’ instance of an image, distinguished by the thin writable layer that sits on top of the image’s read-only layers. It is defined both by its underlying image and by the additional configuration parameters provided when the instance is created.

All changes to a container that add to or modify its data or state are stored in the writable layer. When the container is deleted, only the writable layer is deleted, while the image remains unaltered. This lets multiple containers share access to the same underlying image and yet each have their own data state.
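The layering idea can be modelled in a few lines. The following is a toy model only, assuming each layer is a mapping from file path to contents; the file names and the `create_container` helper are made up for illustration, and real Docker storage drivers work at the filesystem level rather than with Python dictionaries. `ChainMap` is a convenient stand-in because lookups fall through the layers top to bottom while writes always land in the first (writable) mapping.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical image: two read-only layers mapping file paths to contents.
base_layer = MappingProxyType({"/etc/os-release": "debian"})
app_layer = MappingProxyType({"/app/server.py": "v1"})
image_layers = [app_layer, base_layer]  # topmost layer first


def create_container(layers):
    """Stack a fresh, empty writable layer on top of the image layers."""
    return ChainMap({}, *layers)


# Two containers created from the same image.
web1 = create_container(image_layers)
web2 = create_container(image_layers)

# Changes go only into web1's own writable layer.
web1["/app/server.py"] = "patched"
web1["/tmp/cache"] = "data"

print(web1["/app/server.py"])       # read from web1's writable layer
print(web2["/app/server.py"])       # read from the shared image layer
print(app_layer["/app/server.py"])  # the image itself is unchanged
```

Discarding `web1` throws away only its first mapping; `image_layers` and `web2` are untouched, which mirrors what happens when a container is deleted.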

Docker is garnering a lot of attention from across the industry. What began with 1146 lines of code has today breached the billion-dollar valuation mark. According to a TechCrunch report citing Securities and Exchange Commission filings, Docker, the company, has raised US$92 million of a targeted US$192 million funding round. It seems there’s no stopping this “unicorn” right now.

At Loves Cloud, we are leveraging the power of Docker and other open source software solutions to automate, optimize, and scale the workloads of our customers. To learn more about our services aimed at digital transformation of your business, check https://www.loves.cloud/.